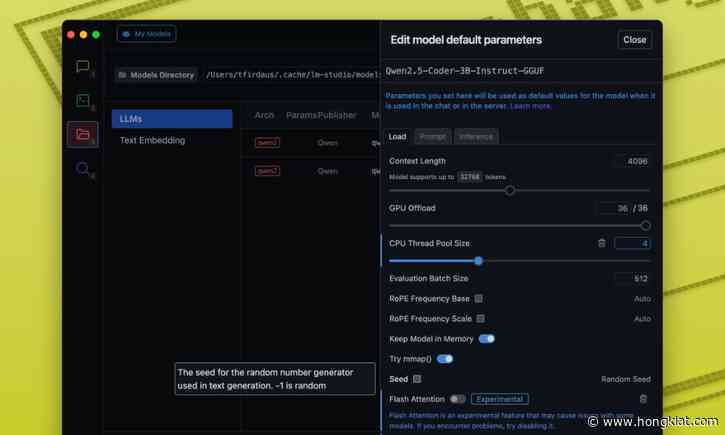

Running large language models (LLMs) locally with tools like LM Studio or Ollama has many advantages, including privacy, lower costs, and offline availability. However, these models can be resource-intensive and require proper optimization to run efficiently.

In this article, we walk through optimizing your setup using LM Studio, whose user-friendly interface and easy installation make things a bit easier. We’ll be covering model selection and so

✗ Close categories

Ice Bucket Challenge

Ice Bucket Challenge

Animals

Animals

Apple

Apple

Apps & Smartphones

Apps & Smartphones

Artificial Intelligence

Artificial Intelligence

Arts

Arts

AT&T Inc.

AT&T Inc.

Bank of America

Bank of America

Berkshire Hathaway

Berkshire Hathaway

Business

Business

Cardinal Health Inc

Cardinal Health Inc

Cars

Cars

Celebrity

Celebrity

Chevron Corporation

Chevron Corporation

Child Care

Child Care

Christian News

Christian News

Christian Videos

Christian Videos

Chrysler Group

Chrysler Group

Citigroup

Citigroup

ConocoPhillips Company

ConocoPhillips Company

Conspiracy Channels

Conspiracy Channels

Conspiracy theories

Conspiracy theories

Conspiracy Videos

Conspiracy Videos

Consumer

Consumer

Cooking & Recipes

Cooking & Recipes

Coronavirus

Coronavirus

Costco Wholesale Corporation

Costco Wholesale Corporation

Craigslist Inc.

Craigslist Inc.

Cryptocurrency

Cryptocurrency

Culture & Media

Culture & Media

CVS Caremark Corporation

CVS Caremark Corporation

Dance music & news

Dance music & news

DIY

DIY

Ebola

Ebola

Electronics

Electronics

Entertainment

Entertainment

Exploding Kittens

Exploding Kittens

Express- Scripts- Holding- Company

Express- Scripts- Holding- Company

Exxon Mobil Corporation

Exxon Mobil Corporation

Facebook Inc.

Facebook Inc.

Fashion

Fashion

Federal National Mortgage Assocation

Federal National Mortgage Assocation

Films/Movies & reviews

Films/Movies & reviews

Finance

Finance

Flat Earth News

Flat Earth News

Food

Food

Ford Motor Company

Ford Motor Company

Funny videos

Funny videos

Gadgets

Gadgets

games

games

General Electric Company

General Electric Company

General Motors

General Motors

General News

General News

Google Inc.

Google Inc.

Gossip news

Gossip news

Gourmet

Gourmet

Gulden

Gulden

Health

Health

Hewlett-Packard Company

Hewlett-Packard Company

Home

Home

ID&T

ID&T

International Business Machines Corporation

International Business Machines Corporation

International Crime

International Crime

JPMorgan Chase & Co

JPMorgan Chase & Co

Kepler-186f planet

Kepler-186f planet

Leisure time

Leisure time

Leisure trends

Leisure trends

Life & Style

Life & Style

Literature

Literature

Maranon Hammocks

Maranon Hammocks

Marathon Petroleum Corporation

Marathon Petroleum Corporation

McDonald's

McDonald's

McKesson Corporation

McKesson Corporation

Medical

Medical

Microsoft Corporation

Microsoft Corporation

Movies

Movies

Music

Music

News videos

News videos

Obituaries

Obituaries

Olympic Games

Olympic Games

Organic food

Organic food

Politics

Politics

Quantum Computing

Quantum Computing

Reddit

Reddit

Royal Dutch Shell

Royal Dutch Shell

Sci-Tech

Sci-Tech

Social media

Social media

social media news

social media news

Technology

Technology

Television

Television

The Kroger Company

The Kroger Company

The Walking Dead

The Walking Dead

Top 25 Zoos

Top 25 Zoos

Top10 twitter accounts

Top10 twitter accounts

Traffic

Traffic

Travel

Travel

Twitter Inc

Twitter Inc

UFO News and more

UFO News and more

US Elections

US Elections

Vacancies

Vacancies

Valero Energy Corporation

Valero Energy Corporation

Verizon Communications Inc.

Verizon Communications Inc.

Virtual & Augmented Reality

Virtual & Augmented Reality

Walmart

Walmart

Weather

Weather

Web design

Web design

White House

White House

Woman - Best beauty products & news

Woman - Best beauty products & news

Woman - Feminism & world's most powerful women

Woman - Feminism & world's most powerful women

Woman - Food & recipes

Woman - Food & recipes

Woman - Hair ideas & tips

Woman - Hair ideas & tips

Woman - Interior & assecoires

Woman - Interior & assecoires

Woman - Latest fashion trends

Woman - Latest fashion trends

Woman - Make-up ideas & tips

Woman - Make-up ideas & tips

Woman - Pregnancy & childbirth

Woman - Pregnancy & childbirth

Woman - Relationship tips & advice

Woman - Relationship tips & advice

Woman - Skin care tips

Woman - Skin care tips

Woman - Weight loss & healty recipes

Woman - Weight loss & healty recipes

Women - Breast cancer

Women - Breast cancer

Women - Most luxurious jewelry brands & news

Women - Most luxurious jewelry brands & news

Women - Beauty salon & spa

Women - Beauty salon & spa

Women - Sexy & luxury lingerie

Women - Sexy & luxury lingerie

World News

World News

Youth & Parenting

Youth & Parenting

✗ Close categories

✗ Close categories

✗ Close categories

Alexandr Dolgopolov

Alexandr Dolgopolov

American Football

American Football

Andy Murray

Andy Murray

Athletics

Athletics

Badmington

Badmington

Baseball

Baseball

Baseball - Arizona Diamondbacks

Baseball - Arizona Diamondbacks

Baseball - Atlanta Braves

Baseball - Atlanta Braves

Baseball - Baltimore Orioles

Baseball - Baltimore Orioles

Baseball - Boston Red Sox

Baseball - Boston Red Sox

Baseball - Chicago Cubs

Baseball - Chicago Cubs

Baseball - Chicago White Sox

Baseball - Chicago White Sox

Baseball - Cincinnati Reds

Baseball - Cincinnati Reds

Baseball - Cleveland Indians

Baseball - Cleveland Indians

Baseball - Colorado Rockies

Baseball - Colorado Rockies

Baseball - Detroit Tigers

Baseball - Detroit Tigers

Baseball - Houston Astros

Baseball - Houston Astros

Baseball - Kansas City Royals

Baseball - Kansas City Royals

Baseball - Los Angeles Angels of Anaheim

Baseball - Los Angeles Angels of Anaheim

Baseball - Los Angeles Dodgers

Baseball - Los Angeles Dodgers

Baseball - Miami Marlins

Baseball - Miami Marlins

Baseball - Milwaukee Brewers

Baseball - Milwaukee Brewers

Baseball - Minnesota Twins

Baseball - Minnesota Twins

Baseball - New York Mets

Baseball - New York Mets

Baseball - New York Yankees

Baseball - New York Yankees

Baseball - Oakland Athletics

Baseball - Oakland Athletics

Baseball - Philadelphia Phillies

Baseball - Philadelphia Phillies

Baseball - Pittsburgh Pirates

Baseball - Pittsburgh Pirates

Baseball - San Diego Padres

Baseball - San Diego Padres

Baseball - San Francisco Giants

Baseball - San Francisco Giants

Baseball - Seattle Mariners

Baseball - Seattle Mariners

Baseball - St. Louis Cardinals

Baseball - St. Louis Cardinals

Baseball - Tampa Bay Rays

Baseball - Tampa Bay Rays

Baseball - Texas Rangers

Baseball - Texas Rangers

Baseball - Toronto Blue Jays

Baseball - Toronto Blue Jays

Baseball - Washington Nationals

Baseball - Washington Nationals

Basketball

Basketball

Basketball - Atlanta Hawks

Basketball - Atlanta Hawks

Basketball - Boston Celtics

Basketball - Boston Celtics

Basketball - Brooklyn Nets

Basketball - Brooklyn Nets

Basketball - Charlotte Hornets

Basketball - Charlotte Hornets

Basketball - Chicago Bulls

Basketball - Chicago Bulls

Basketball - Cleveland Cavaliers

Basketball - Cleveland Cavaliers

Basketball - Dallas Mavericks

Basketball - Dallas Mavericks

Basketball - Denver Nuggets

Basketball - Denver Nuggets

Basketball - Detroit Pistons

Basketball - Detroit Pistons

Basketball - Golden State Warriors

Basketball - Golden State Warriors

Basketball - Houston Rockets

Basketball - Houston Rockets

Basketball - Indiana Pacers

Basketball - Indiana Pacers

Basketball - Los Angeles Clippers

Basketball - Los Angeles Clippers

Basketball - Los Angeles Lakers

Basketball - Los Angeles Lakers

Basketball - Memphis Grizzlies

Basketball - Memphis Grizzlies

Basketball - Miami Heat

Basketball - Miami Heat

Basketball - Milwaukee Bucks

Basketball - Milwaukee Bucks

Basketball - Minnesota Timberwolves

Basketball - Minnesota Timberwolves

Basketball - New Orleans Pelicans

Basketball - New Orleans Pelicans

Basketball - New York Knicks

Basketball - New York Knicks

Basketball - Oklahoma City Thunder

Basketball - Oklahoma City Thunder

Basketball - Orlando Magic

Basketball - Orlando Magic

Basketball - Philadelphia 76ers

Basketball - Philadelphia 76ers

Basketball - Phoenix Suns

Basketball - Phoenix Suns

Basketball - Portland Trail Blazers

Basketball - Portland Trail Blazers

Basketball - Sacramento Kings

Basketball - Sacramento Kings

Basketball - San Antonio Spurs

Basketball - San Antonio Spurs

Basketball - Toronto Raptors

Basketball - Toronto Raptors

Basketball - Utah Jazz

Basketball - Utah Jazz

Basketball - Washington Wizards

Basketball - Washington Wizards

Body building

Body building

Boxing

Boxing

Cheerleading

Cheerleading

Cricket

Cricket

Cycling

Cycling

Dancing

Dancing

David Ferrer

David Ferrer

David Goffin

David Goffin

Downhill Skiing

Downhill Skiing

Ernests Gulbis

Ernests Gulbis

Fabio Fognini

Fabio Fognini

Feliciano Lopez

Feliciano Lopez

Figure Skating

Figure Skating

Football - Arizona Cardinals

Football - Arizona Cardinals

Football - Atlanta Falcons

Football - Atlanta Falcons

Football - Baltimore Ravens

Football - Baltimore Ravens

Football - Buffalo Bills

Football - Buffalo Bills

Football - Carolina Panthers

Football - Carolina Panthers

Football - Chicago Bears

Football - Chicago Bears

Football - Cincinnati Bengals

Football - Cincinnati Bengals

Football - Cleveland Browns

Football - Cleveland Browns

Football - Dallas Cowboys

Football - Dallas Cowboys

Football - Denver Broncos

Football - Denver Broncos

Football - Detroit Lions

Football - Detroit Lions

Football - Green Bay Packers

Football - Green Bay Packers

Football - Houston Texans

Football - Houston Texans

Football - Indianapolis Colts

Football - Indianapolis Colts

Football - Jacksonville Jaguars

Football - Jacksonville Jaguars

Football - Kansas City Chiefs

Football - Kansas City Chiefs

Football - Miami Dolphins

Football - Miami Dolphins

Football - Minnesota Vikings

Football - Minnesota Vikings

Football - New England Patriots

Football - New England Patriots

Football - New Orleans Saints

Football - New Orleans Saints

Football - New York Giants

Football - New York Giants

Football - New York Jets

Football - New York Jets

Football - Oakland Raiders

Football - Oakland Raiders

Football - Philadelphia Eagles

Football - Philadelphia Eagles

Football - Pittsburgh Steelers

Football - Pittsburgh Steelers

Football - San Diego Chargers

Football - San Diego Chargers

Football - San Francisco 49ers

Football - San Francisco 49ers

Football - Seattle Seahawks

Football - Seattle Seahawks

Football - St. Louis Rams

Football - St. Louis Rams

Football - Tampa Bay Buccaneers

Football - Tampa Bay Buccaneers

Football - Tennessee Titans

Football - Tennessee Titans

Football - Washington Redskins

Football - Washington Redskins

Formula 1

Formula 1

Formula 1 - Force India Videos

Formula 1 - Force India Videos

Formula 1 - Infiniti Red Bull Racing Videos

Formula 1 - Infiniti Red Bull Racing Videos

Formula 1 - Live Stream & News

Formula 1 - Live Stream & News

Formula 1 - McLaren Videos

Formula 1 - McLaren Videos

Formula 1 - Mercedes AMG Petronas Videos

Formula 1 - Mercedes AMG Petronas Videos

Formula 1 - Sauber F1 Team Videos

Formula 1 - Sauber F1 Team Videos

Formula 1 - Scuderia Ferrari Videos

Formula 1 - Scuderia Ferrari Videos

Formula 1 - Scuderia Toro Rosso Videos

Formula 1 - Scuderia Toro Rosso Videos

Formula 1 - Team Lotus Videos

Formula 1 - Team Lotus Videos

Formula 1 - Williams Martini videos

Formula 1 - Williams Martini videos

Gael Monfils

Gael Monfils

Gilles Simon

Gilles Simon

Golf

Golf

Grigor Dimitrov

Grigor Dimitrov

Gymnastics

Gymnastics

Horse Racing

Horse Racing

Ice Hockey

Ice Hockey

Ice Hockey - Anaheim Ducks

Ice Hockey - Anaheim Ducks

Ice Hockey - Arizona Coyotes

Ice Hockey - Arizona Coyotes

Ice Hockey - Boston Bruins

Ice Hockey - Boston Bruins

Ice Hockey - Buffalo Sabres

Ice Hockey - Buffalo Sabres

Ice Hockey - Calgary Flames

Ice Hockey - Calgary Flames

Ice Hockey - Carolina Hurricanes

Ice Hockey - Carolina Hurricanes

Ice Hockey - Chicago Blackhawks

Ice Hockey - Chicago Blackhawks

Ice Hockey - Colorado Avalanche

Ice Hockey - Colorado Avalanche

Ice Hockey - Columbus Blue Jackets

Ice Hockey - Columbus Blue Jackets

Ice Hockey - Dallas Stars

Ice Hockey - Dallas Stars

Ice Hockey - Detroit Red Wings

Ice Hockey - Detroit Red Wings

Ice Hockey - Edmonton Oilers

Ice Hockey - Edmonton Oilers

Ice Hockey - Florida Panthers

Ice Hockey - Florida Panthers

Ice Hockey - Los Angeles Kings

Ice Hockey - Los Angeles Kings

Ice Hockey - Minnesota Wild

Ice Hockey - Minnesota Wild

Ice Hockey - Montreal Canadiens

Ice Hockey - Montreal Canadiens

Ice Hockey - Nashville Predators

Ice Hockey - Nashville Predators

Ice Hockey - New Jersey Devils

Ice Hockey - New Jersey Devils

Ice Hockey - New York Islanders

Ice Hockey - New York Islanders

Ice Hockey - New York Rangers

Ice Hockey - New York Rangers

Ice Hockey - Ottawa Senators

Ice Hockey - Ottawa Senators

Ice Hockey - Philadelphia Flyers

Ice Hockey - Philadelphia Flyers

Ice Hockey - Pittsburg Penguins

Ice Hockey - Pittsburg Penguins

Ice Hockey - San Jose Sharks

Ice Hockey - San Jose Sharks

Ice Hockey - St. Louis Blues

Ice Hockey - St. Louis Blues

Ice Hockey - Tampa Bay Lightning

Ice Hockey - Tampa Bay Lightning

Ice Hockey - Toronto Maple Leafs

Ice Hockey - Toronto Maple Leafs

Ice Hockey - Vancouver Canucks

Ice Hockey - Vancouver Canucks

Ice Hockey - Washington Capitals

Ice Hockey - Washington Capitals

Ice Hockey - Winnipeg Jets

Ice Hockey - Winnipeg Jets

Ivo Karlovic

Ivo Karlovic

Jeremy Chardy

Jeremy Chardy

Jo-Wilfried Tsonga

Jo-Wilfried Tsonga

John Isner

John Isner

Julien Benneteau

Julien Benneteau

Kei Nishikori

Kei Nishikori

Kevin Anderson

Kevin Anderson

Lacrosse

Lacrosse

Leonardo Mayer

Leonardo Mayer

Marin Cilic

Marin Cilic

Milos Raonic

Milos Raonic

MMA

MMA

Nascar

Nascar

NBA twitter top10

NBA twitter top10

NFL

NFL

Novak Djokovic

Novak Djokovic

Pablo Cuevas

Pablo Cuevas

Philipp Kohlschreiber

Philipp Kohlschreiber

Rafael Nadal

Rafael Nadal

Richard Gasquet

Richard Gasquet

Roberto Bautista Agut

Roberto Bautista Agut

Roger Federer

Roger Federer

Skateboarding

Skateboarding

Skiing

Skiing

Soccer - Atlanta United FC

Soccer - Atlanta United FC

Soccer - Chicago Fire

Soccer - Chicago Fire

Soccer - Colorado Rapids

Soccer - Colorado Rapids

Soccer - Columbus Crew

Soccer - Columbus Crew

Soccer - D.C. United

Soccer - D.C. United

Soccer - FC Dallas

Soccer - FC Dallas

Soccer - Houston Dynamo

Soccer - Houston Dynamo

Soccer - LA Galaxy

Soccer - LA Galaxy

Soccer - Los Angeles Football Club

Soccer - Los Angeles Football Club

Soccer - Major League

Soccer - Major League

Soccer - Montreal Impact

Soccer - Montreal Impact

Soccer - New England Revolution

Soccer - New England Revolution

Soccer - New York City FC

Soccer - New York City FC

Soccer - New York Red Bulls

Soccer - New York Red Bulls

Soccer - Orlando City SC

Soccer - Orlando City SC

Soccer - Philadelphia Union

Soccer - Philadelphia Union

Soccer - Portland Timbers

Soccer - Portland Timbers

Soccer - Real Salt Lake

Soccer - Real Salt Lake

Soccer - San Jose Earthquakes

Soccer - San Jose Earthquakes

Soccer - Seattle Sounders FC

Soccer - Seattle Sounders FC

Soccer - Sporting Kansas City

Soccer - Sporting Kansas City

Soccer - Toronto FC

Soccer - Toronto FC

Soccer - United States Soccer Federation

Soccer - United States Soccer Federation

Soccer - Vancouver Whitecaps FC

Soccer - Vancouver Whitecaps FC

Stanislas Wawrinka

Stanislas Wawrinka

Swimming

Swimming

Tennis

Tennis

Tomas Berdych

Tomas Berdych

Tommy Robredo

Tommy Robredo

Top 30 tennis players

Top 30 tennis players

Volleyball

Volleyball

World Cup soccer

World Cup soccer

World Soccer

World Soccer

Wrestling

Wrestling

✗ Close categories

✗ Close categories

✗ Close categories

Akron

Akron

Alabama

Alabama

Alaska

Alaska

Albuquerque

Albuquerque

Anaheim

Anaheim

Anchorage

Anchorage

Arizona

Arizona

Arkansas

Arkansas

Arlington

Arlington

Atlanta

Atlanta

Aurora

Aurora

Austin

Austin

Bakersfield

Bakersfield

Baltimore

Baltimore

Baton Rouge

Baton Rouge

Birmingham

Birmingham

Boston

Boston

Buffalo

Buffalo

California

California

Chandler

Chandler

Charlotte

Charlotte

Chesapeake

Chesapeake

Chicago

Chicago

Chula Vista

Chula Vista

Cincinnati

Cincinnati

Cleveland

Cleveland

Colorado

Colorado

Colorado Springs

Colorado Springs

Columbus

Columbus

Connecticut

Connecticut

Corpus Christi

Corpus Christi

Dallas

Dallas

Delaware

Delaware

Denver

Denver

Detroit

Detroit

Durham

Durham

El Paso

El Paso

Florida

Florida

Fort Wayne

Fort Wayne

Fort Worth

Fort Worth

Fremont

Fremont

Fresno

Fresno

Garland

Garland

Georgia

Georgia

Glendale

Glendale

Greensboro

Greensboro

Hawaii

Hawaii

Henderson

Henderson

Hialeah

Hialeah

Hollywood

Hollywood

Honolulu

Honolulu

Houston

Houston

Idaho

Idaho

Illinois

Illinois

Indiana

Indiana

Indianapolis

Indianapolis

Iowa

Iowa

Jacksonville

Jacksonville

Jersey City

Jersey City

Kansas

Kansas

Kansas City

Kansas City

Kentucky

Kentucky

Laredo

Laredo

Las Vegas

Las Vegas

Lexington

Lexington

Lincoln

Lincoln

Long Beach

Long Beach

Los Angeles

Los Angeles

Louisiana

Louisiana

Louisville

Louisville

Lubbock

Lubbock

Madison

Madison

Maine

Maine

Maryland

Maryland

Massachusetts

Massachusetts

Memphis

Memphis

Mesa

Mesa

Miami

Miami

Michigan

Michigan

Milwaukee

Milwaukee

Minneapolis

Minneapolis

Minnesota

Minnesota

Mississippi

Mississippi

Missouri

Missouri

Modesto

Modesto

Montana

Montana

Montgomery

Montgomery

Nashville

Nashville

Nebraska

Nebraska

Nevada

Nevada

New Hampshire

New Hampshire

New Jersey

New Jersey

New Mexico

New Mexico

New Orleans

New Orleans

New York

New York

Newark

Newark

Norfolk

Norfolk

North Carolina

North Carolina

North Dakota

North Dakota

NY Bronx

NY Bronx

NY Brooklyn

NY Brooklyn

NY Chinatown

NY Chinatown

NY Manhattan

NY Manhattan

NY Queens

NY Queens

NY Staten Island

NY Staten Island

Oakland

Oakland

Ohio

Ohio

Oklahoma

Oklahoma

Oklahoma City

Oklahoma City

Omaha

Omaha

Oregon

Oregon

Orlando

Orlando

Pennsylvania

Pennsylvania

Philadelphia

Philadelphia

Phoenix

Phoenix

Pittsburgh

Pittsburgh

Plano

Plano

Portland

Portland

Raleigh

Raleigh

Reno

Reno

Rhode Island

Rhode Island

Riverside

Riverside

Rochester

Rochester

Sacramento

Sacramento

Saint Louis

Saint Louis

Saint Paul

Saint Paul

Saint Petersburg

Saint Petersburg

San Antonio

San Antonio

San Diego

San Diego

San Francisco

San Francisco

San Jose

San Jose

Santa Ana

Santa Ana

Scottsdale

Scottsdale

Seattle

Seattle

Shreveport

Shreveport

South Carolina

South Carolina

South Dakota

South Dakota

Stockton

Stockton

Tampa

Tampa

Tennessee

Tennessee

Texas

Texas

Toledo

Toledo

Tulsa

Tulsa

Tuscon

Tuscon

Utah

Utah

Vermont

Vermont

Virginia

Virginia

Virginia Beach

Virginia Beach

Washington

Washington

West Virginia

West Virginia

Wichita

Wichita

Wisconsin

Wisconsin

Wyoming

Wyoming

✗ Close categories

✗ Close categories

✗ Close categories

Alternative rock

Alternative rock

Blues

Blues

Classic Rock & Pop

Classic Rock & Pop

Country news

Country news

Country videos

Country videos

Dance news

Dance news

Dance videos

Dance videos

Dubstep news & videos

Dubstep news & videos

EDM

EDM

Heavy Metal

Heavy Metal

Hip-Hop news

Hip-Hop news

Hip-Hop videos

Hip-Hop videos

Jazz

Jazz

Latin

Latin

Minimal Techno

Minimal Techno

New EDM, Trance, Hip hop and R&B Music

New EDM, Trance, Hip hop and R&B Music

Pop videos

Pop videos

R&B Hip-Hop news

R&B Hip-Hop news

R&B Hip-Hop videos

R&B Hip-Hop videos

R&B News

R&B News

R&B videos

R&B videos

Reggae

Reggae

Retro Trance & Dance Music

Retro Trance & Dance Music

Rock

Rock

Soul

Soul

Soundrange Dub Rebel

Soundrange Dub Rebel

Spinnin' Records

Spinnin' Records

Techno News & mixes

Techno News & mixes

Trance Music

Trance Music

Trance news

Trance news

Urban & Caribbean

Urban & Caribbean

✗ Close categories

✗ Close categories

✗ Close categories

Aaron Rodgers

Aaron Rodgers

Adam Sandler

Adam Sandler

Adele

Adele

Al Pacino

Al Pacino

Amanda Seyfried

Amanda Seyfried

Amy Adams

Amy Adams

Angelina Jolie

Angelina Jolie

Ashley Judd

Ashley Judd

Ashton Kutcher

Ashton Kutcher

Barack Obama

Barack Obama

Ben Affleck

Ben Affleck

Beyoncé

Beyoncé

Big Sean

Big Sean

Bill Gates

Bill Gates

Bill Murray

Bill Murray

Billie Eilish

Billie Eilish

Bon Jovi

Bon Jovi

Brad Pitt

Brad Pitt

Bradley Cooper

Bradley Cooper

Brendan Jordan

Brendan Jordan

Britney Spears

Britney Spears

Bruce Springsteen

Bruce Springsteen

Bruce Willis

Bruce Willis

Bruno Mars

Bruno Mars

Bryan Cranston

Bryan Cranston

Busta Rhymes

Busta Rhymes

Cameron Diaz

Cameron Diaz

Catherine Zeta-Jones

Catherine Zeta-Jones

Channing Tatum

Channing Tatum

Charlie Sheen

Charlie Sheen

Chris Hemsworth

Chris Hemsworth

Christina Aguilera

Christina Aguilera

Christoph Waltz

Christoph Waltz

Danny Trejo

Danny Trejo

David Beckham

David Beckham

David Hasselhoff

David Hasselhoff

David Letterman

David Letterman

Denzel Washington

Denzel Washington

Derek Jeter

Derek Jeter

DJ 3lau

DJ 3lau

DJ Above & Beyond

DJ Above & Beyond

DJ Afrojack

DJ Afrojack

DJ Alesso

DJ Alesso

DJ Aly & Fila

DJ Aly & Fila

DJ Andrew Rayel

DJ Andrew Rayel

DJ Angerfist

DJ Angerfist

DJ Armin Van Buuren

DJ Armin Van Buuren

DJ Arty

DJ Arty

DJ ATB

DJ ATB

DJ Audien

DJ Audien

DJ Avicii

DJ Avicii

DJ Axwell

DJ Axwell

DJ Bingo Players

DJ Bingo Players

DJ Bl3ND

DJ Bl3ND

DJ Blasterjaxx

DJ Blasterjaxx

DJ Borgeous

DJ Borgeous

DJ Borgore

DJ Borgore

DJ Boy George

DJ Boy George

DJ Brennan Heart

DJ Brennan Heart

DJ Calvin Harris

DJ Calvin Harris

DJ Carl Cox

DJ Carl Cox

DJ Carnage

DJ Carnage

DJ Code Black

DJ Code Black

DJ Coone

DJ Coone

DJ Cosmic Gate

DJ Cosmic Gate

DJ Da Tweekaz

DJ Da Tweekaz

DJ Dada Life

DJ Dada Life

DJ Daft Punk

DJ Daft Punk

DJ Dannic

DJ Dannic

DJ Dash Berlin

DJ Dash Berlin

DJ David Guetta

DJ David Guetta

DJ Deadmau5

DJ Deadmau5

DJ Deorro

DJ Deorro

DJ Diego Miranda

DJ Diego Miranda

DJ Dillon Francis

DJ Dillon Francis

DJ Dimitri Vegas & Like Mike

DJ Dimitri Vegas & Like Mike

DJ Diplo

DJ Diplo

DJ Don Diablo

DJ Don Diablo

DJ DVBBS

DJ DVBBS

DJ Dyro

DJ Dyro

DJ Eric Prydz

DJ Eric Prydz

Dj Fabian (DJ Fabvd M)

Dj Fabian (DJ Fabvd M)

DJ Fedde Le Grand

DJ Fedde Le Grand

DJ Felguk

DJ Felguk

DJ Ferry Corsten

DJ Ferry Corsten

DJ Firebeatz

DJ Firebeatz

DJ Frontliner

DJ Frontliner

DJ Gabry Ponte

DJ Gabry Ponte

DJ Gareth Emery

DJ Gareth Emery

DJ Hardwell

DJ Hardwell

DJ Headhunterz

DJ Headhunterz

DJ Heatbeat

DJ Heatbeat

DJ Infected Mushroom

DJ Infected Mushroom

DJ John O'Callaghan

DJ John O'Callaghan

DJ Kaskade

DJ Kaskade

DJ Knife Party

DJ Knife Party

DJ Krewella

DJ Krewella

DJ Kura

DJ Kura

DJ Laidback Luke

DJ Laidback Luke

DJ Madeon

DJ Madeon

DJ MAKJ

DJ MAKJ

DJ Markus Schulz

DJ Markus Schulz

DJ Martin Garrix

DJ Martin Garrix

DJ Merk & Kremont

DJ Merk & Kremont

DJ Mike Candys

DJ Mike Candys

DJ Nervo

DJ Nervo

DJ Nicky Romero

DJ Nicky Romero

DJ Noisecontrollers

DJ Noisecontrollers

DJ Oliver Heldens

DJ Oliver Heldens

DJ Orjan Nilsen

DJ Orjan Nilsen

DJ Paul Van Dyk

DJ Paul Van Dyk

DJ Porter Robinson

DJ Porter Robinson

DJ Quentin Mosimann

DJ Quentin Mosimann

DJ Quintino

DJ Quintino

DJ R3hab

DJ R3hab

DJ Radical Redemption

DJ Radical Redemption

DJ Richie Hawtin

DJ Richie Hawtin

DJ Sander Van Doorn

DJ Sander Van Doorn

DJ Sebastian Ingrosso

DJ Sebastian Ingrosso

DJ Showtek

DJ Showtek

DJ Skrillex

DJ Skrillex

DJ Snake

DJ Snake

DJ Steve Angello

DJ Steve Angello

DJ Steve Aoki

DJ Steve Aoki

DJ Tenishia

DJ Tenishia

DJ The Chainsmokers

DJ The Chainsmokers

DJ Tiddey

DJ Tiddey

DJ Tiesto

DJ Tiesto

DJ TJR

DJ TJR

DJ Umek

DJ Umek

DJ Ummet Ozcan

DJ Ummet Ozcan

DJ Vicetone

DJ Vicetone

DJ VINAI

DJ VINAI

DJ W&W

DJ W&W

DJ Wildstylez

DJ Wildstylez

DJ Wolfpack

DJ Wolfpack

DJ Yves V

DJ Yves V

DJ Zatox

DJ Zatox

DJ Zedd

DJ Zedd

DJ Zomboy

DJ Zomboy

DMX

DMX

Donald Trump

Donald Trump

Dr. Dre

Dr. Dre

Dr. Phil McGraw

Dr. Phil McGraw

Drew Brees

Drew Brees

Dwayne Johnson

Dwayne Johnson

Dwyane Wade

Dwyane Wade

Eddie Murphy

Eddie Murphy

Edward Snowden

Edward Snowden

Ellen DeGeneres

Ellen DeGeneres

Emily Mortimer

Emily Mortimer

Eminem

Eminem

Emma Thompson

Emma Thompson

Famke Janssen

Famke Janssen

Farrah Fawcett

Farrah Fawcett

Floyd Mayweather

Floyd Mayweather

Floyd Mayweather- Boxing

Floyd Mayweather- Boxing

Franco Nero

Franco Nero

Gabby Douglas

Gabby Douglas

Gabriel Macht

Gabriel Macht

Garth Brooks

Garth Brooks

Gemma Arterton

Gemma Arterton

George Clooney

George Clooney

George Pal

George Pal

Gisele Bundchen

Gisele Bundchen

Glenn Beck

Glenn Beck

Golshifteh Farahani

Golshifteh Farahani

Greg Giraldo

Greg Giraldo

Gwyneth Paltrow

Gwyneth Paltrow

Howard Stern

Howard Stern

Hulk Hogan

Hulk Hogan

Ice Cube

Ice Cube

J.J. Abrams

J.J. Abrams

Jack Nicholson

Jack Nicholson

James Caviezel

James Caviezel

James Patterson

James Patterson

Jane Fonda

Jane Fonda

Jason Aldean

Jason Aldean

Jason Bateman

Jason Bateman

Jason London

Jason London

Jason Schwartzman

Jason Schwartzman

Jason Statham

Jason Statham

Jay Leno

Jay Leno

Jay-Z

Jay-Z

Jeananne Goossen

Jeananne Goossen

Jennifer Aniston

Jennifer Aniston

Jennifer Garner

Jennifer Garner

Jennifer Lawrence

Jennifer Lawrence

Jennifer Lopez

Jennifer Lopez

Jeremy Renner

Jeremy Renner

Jerry Seinfeld

Jerry Seinfeld

Jessica Alba

Jessica Alba

Jessica Chastain

Jessica Chastain

Jim Carrey

Jim Carrey

Jim Parsons

Jim Parsons

Jimmy Buffett

Jimmy Buffett

Jimmy Fallon

Jimmy Fallon

Joel Zimmerman

Joel Zimmerman

John Green

John Green

John Malkovich

John Malkovich

Johnny Depp

Johnny Depp

Jon Hamm

Jon Hamm

Jon Lester

Jon Lester

Jon Stewart

Jon Stewart

Joss Whedon

Joss Whedon

Jude Law

Jude Law

Judy Sheindlin

Judy Sheindlin

Julia Roberts

Julia Roberts

Justin Bieber

Justin Bieber

Justin Timberlake

Justin Timberlake

Kaley Cuoco

Kaley Cuoco

Kanye West

Kanye West

Kate Upton

Kate Upton

Kathleen Turner

Kathleen Turner

Katy Perry

Katy Perry

Kelly Lynch

Kelly Lynch

Kenny Chesney

Kenny Chesney

Kerry Washington

Kerry Washington

Kevin Durant

Kevin Durant

Kevin Hart

Kevin Hart

Kevin Spacey

Kevin Spacey

Kim Kardashian

Kim Kardashian

Kobe Bryant

Kobe Bryant

Kristen Stewart

Kristen Stewart

Lady Gaga

Lady Gaga

LeBron James

LeBron James

Leonardo DiCaprio

Leonardo DiCaprio

Lil Wayne

Lil Wayne

Lindsay Lohan

Lindsay Lohan

Lucas Black

Lucas Black

Luke Bryan

Luke Bryan

Madonna

Madonna

Mahendra Singh Dhoni

Mahendra Singh Dhoni

Maksim Vitorgan

Maksim Vitorgan

Manny Pacquiao

Manny Pacquiao

Maria Sharapova

Maria Sharapova

Marisa Tomei

Marisa Tomei

Mark Harmon

Mark Harmon

Mark Wahlberg

Mark Wahlberg

Mark Zuckerberg

Mark Zuckerberg

Martin Sheen

Martin Sheen

Mary-Louise Parker

Mary-Louise Parker

Matt Damon

Matt Damon

Matthew McConaughey

Matthew McConaughey

Megan Fox

Megan Fox

Meryl Streep

Meryl Streep

Method Man

Method Man

Michael Bay

Michael Bay

Michael Jordan

Michael Jordan

Michael Phelps

Michael Phelps

Mickey Rourke

Mickey Rourke

Mike Tyson & Boxing

Mike Tyson & Boxing

Miley Cyrus

Miley Cyrus

Morgan Freeman

Morgan Freeman

Mos Def

Mos Def

Naomi Watts

Naomi Watts

Natalie Portman

Natalie Portman

Natalya Rudakova

Natalya Rudakova

Neil Patrick Harris

Neil Patrick Harris

Nicolas Cage

Nicolas Cage

Nicole Kidman

Nicole Kidman

Novak Djokovic

Novak Djokovic

One Direction- Band

One Direction- Band

Oprah Winfrey

Oprah Winfrey

P!nk

P!nk

Paige Simpson

Paige Simpson

Pamela Anderson

Pamela Anderson

Patricia Arquette

Patricia Arquette

Patrick Wilson

Patrick Wilson

Paul Rudd

Paul Rudd

Peter Billingsley

Peter Billingsley

Peyton Manning

Peyton Manning

Pharrell Williams

Pharrell Williams

Phil Mickelson

Phil Mickelson

Philip Seymour Hoffman

Philip Seymour Hoffman

Rafael Nadal

Rafael Nadal

Rihanna

Rihanna

Robert De Niro

Robert De Niro

Robert Downey Jr

Robert Downey Jr

Robert Pattinson

Robert Pattinson

Robert Redford

Robert Redford

Roger Federer

Roger Federer

Roman Coppola

Roman Coppola

Ron Howard

Ron Howard

Ron Jeremy

Ron Jeremy

Rush Limbaugh

Rush Limbaugh

Ryan Seacrest

Ryan Seacrest

Sandra Bullock

Sandra Bullock

Sarah Jessica Parker

Sarah Jessica Parker

Sarah Shahi

Sarah Shahi

Scarlett Johansson

Scarlett Johansson

Sean "Diddy" Combs

Sean "Diddy" Combs

Sean Hannity

Sean Hannity

Sean Penn

Sean Penn

Selena Gomez

Selena Gomez

Serena Williams

Serena Williams

Seth MacFarlane

Seth MacFarlane

Seth Rogen

Seth Rogen

Shakira

Shakira

Shia LaBeouf

Shia LaBeouf

Skylar Astin

Skylar Astin

Snoop Dogg

Snoop Dogg

Sofia Vergara

Sofia Vergara

Stacey Dash

Stacey Dash

Stephen King

Stephen King

Steven Spielberg

Steven Spielberg

Sylvester Stallone

Sylvester Stallone

Taylor Swift

Taylor Swift

The Eagles- Band

The Eagles- Band

Tiger Woods

Tiger Woods

Tim Tebow

Tim Tebow

Toby Keith

Toby Keith

Tom Cruise

Tom Cruise

Tom Hanks

Tom Hanks

Tom Hardy

Tom Hardy

Tommy Lee Jones

Tommy Lee Jones

Tyler Perry

Tyler Perry

Usain Bolt

Usain Bolt

Veronica Roth

Veronica Roth

Vin Diesel

Vin Diesel

Vince Vaughn

Vince Vaughn

Vincent D'Onofrio

Vincent D'Onofrio

Viola Davis

Viola Davis

Warren Buffet

Warren Buffet

Wesley Snipes

Wesley Snipes

Will Ferrell

Will Ferrell

Will Smith

Will Smith

William Shatner

William Shatner

Zooey Deschanel

Zooey Deschanel

✗ Close categories

✗ Close categories

✗ Close categories
